Phoebe Product Update

A new agent architecture, the ability to tag specific resources within investigations, and a new alerts UI for filtering and grouping — here’s what we shipped this month.

🧠 A new, more accurate Logs Subagent

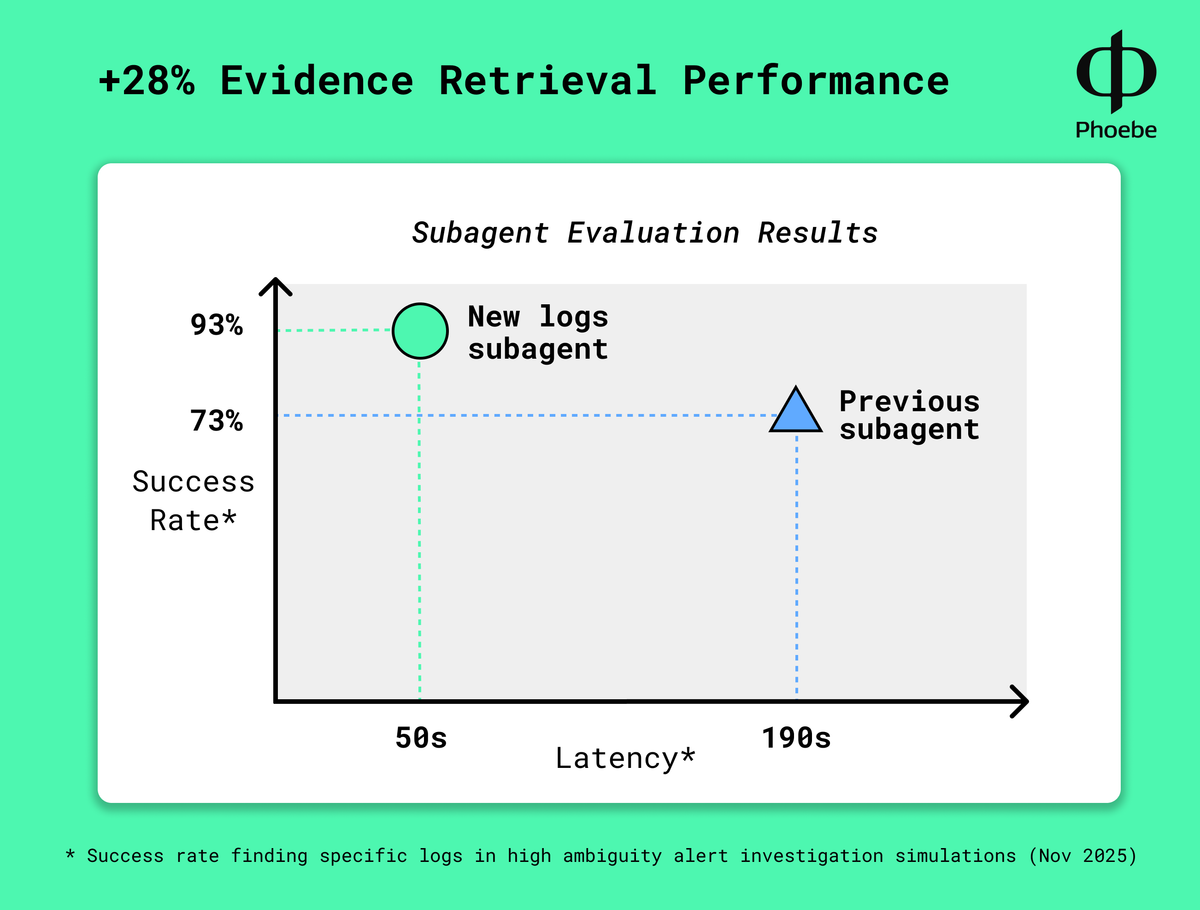

A new Logs Subagent is now live in Phoebe, bringing another leap forward in investigation performance: a 28% improvement in evidence retrieval and a 74% reduction in latency.

LLMs alone are weak at searching observability data because of its sheer scale (trillions of tokens) and ambiguous query parameters. LLM-based agents that generate queries and retry on failure were a step forward.

Phoebe's new subagent architecture goes further, with an intelligent loop that iteratively refines queries to narrow in on the most relevant logs.

We test data source subagents using simulation evals, which measure how consistently they can solve difficult "needle-in-a-haystack" challenges and find the right evidence. The new Logs Subagent delivered our largest performance jump yet.

🔗 @Mentions in Investigations

You can now mention resources directly within investigations using @.

Type @ to open the mention menu and link relevant services, metrics, alerts, and incidents, or use the “+ Resources” button to browse available resources and add the relevant ones inline.

This makes it easier to anchor investigations in the right context and to quickly access the resources that matter for resolving a specific problem.

🚨 Alerts UI

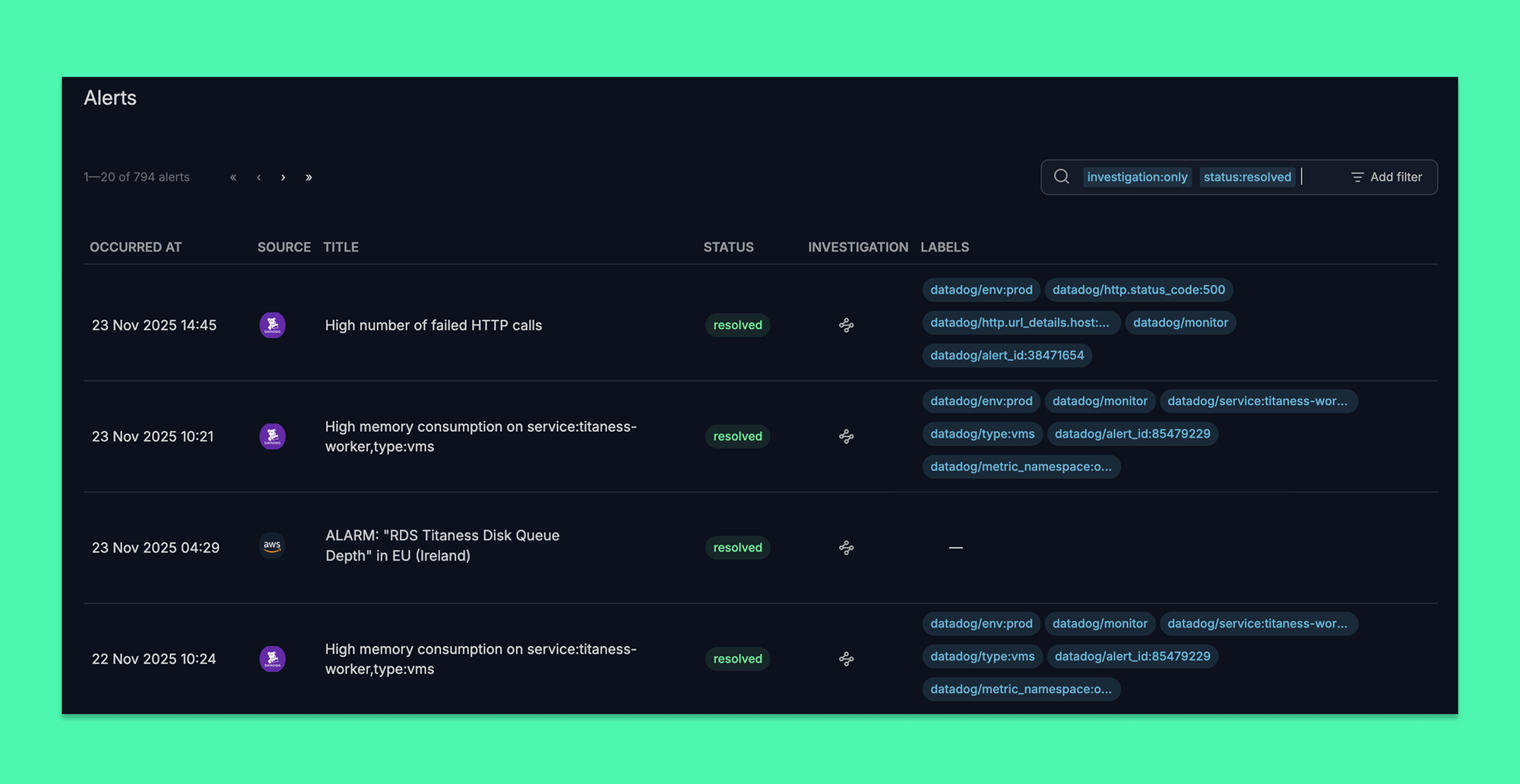

We’ve introduced a new Alerts UI: a tabular view that brings all your alerts and related investigations into one place.

The table supports flexible filtering and search, so you can quickly narrow it down to just the alerts you care about by team, source, or other relevant labels.

We have many more plans for alerts (thanks to great ideas from our customers), so watch for future updates!

Customer love

❤️ So so cool. I'm excited for my next alert so I can see it work again!

❤️ Phoebe goes so much deeper than what we were able to build in-house